Who hides behind a mask (secret, secret; I’ve got a secret)

So no one else can see (secret, secret; I’ve got a secret)

My true identity!

- “Mr. Roboto” by Styx

Happy Golden Years

Twenty years ago, you would be reading this article on MySpace or Xanga with a beautiful MIDI rendition of “Mr. Roboto” by Styx playing on loop. The '90s and early '00s were a great time for IT. We had Napster, AOL Instant Messenger, and a guy named Geoff running your corporate webpage, intranet, phone system, and tech support for ClarisWorks.

Today Geoff is a CTO with five or six departments handling the various aspects of those same needs he took care of just twenty short years ago. Certainly, processes have changed over the years, because both the product going in and the product coming out have changed. We are better at securing data; our (plain text) secrets are no longer living next door in /var/www/.htaccess. We know we need to keep track of certificates, tokens, and database access keys. None of us wants our company showing up in the headlines as the next big data breach. Arguably, however, some habits and attitudes from the early days still linger because “we are just waiting for Earl to retire” or “it would cost far too much to ask the devs to retool everything.” Stick around: we will discuss those attitudes and look at ways to start the paradigm shift to better practices, repeatable methods, and a better night’s sleep.

The Perfect World

There are many reasons to move secrets to a different abstraction layer, and in the perfect world, our applications consume them natively. In Kubernetes, that means containerized apps can use passwords, tokens, certificates, etc. injected as environment variables and files from a Secret object. These Secret objects should then be managed by a specific team or by a secrets management system like Google Secret Manager, Azure Key Vault, AWS Key Management Service, or HashiCorp Vault. Role-based access control (RBAC) should then be applied to the Secret objects so that only those people directly responsible can list, edit, or create them.
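
As a rough sketch of what that RBAC could look like, the function below asks kubectl to generate a Role and RoleBinding that limit Secret access in one namespace to a single group. The role name `secret-manager`, the group `secrets-team`, and the verb list are assumptions made for illustration, not something prescribed here; `--dry-run=client -o yaml` only prints the manifests and changes nothing on the cluster.

```shell
# Print a Role and RoleBinding that restrict Secret access in one namespace.
# Hypothetical names: role "secret-manager", group "secrets-team".
secret_rbac_manifests() {
  namespace="$1"

  # Role: which verbs are allowed on Secret objects in this namespace.
  kubectl create role secret-manager \
    --verb=get,list,create,update,delete \
    --resource=secrets \
    --namespace "$namespace" \
    --dry-run=client -o yaml

  echo '---'

  # RoleBinding: who receives the Role.
  kubectl create rolebinding secret-manager-binding \
    --role=secret-manager \
    --group=secrets-team \
    --namespace "$namespace" \
    --dry-run=client -o yaml
}
```

Calling `secret_rbac_manifests fortuna` prints both manifests for review; piping the output to `kubectl apply -f -` would apply them.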

Security

This may seem like the obvious one, but obfuscating and abstracting secrets out of the normal operating and development space is the easiest way to also keep them out of consumer space. Genius, I know. What about all those repositories we use to collaborate and apply version control? It is a lot easier to keep tokens and passwords from being accidentally pushed to the wrong place if they were never in the code in the first place. In a similar way, it allows companies to collaborate with consultants and outside developers without giving away the secret sauce.

Simplification

Abstraction of any sort can vastly simplify code. If we look at the life cycle of an application as it moves from local development through staging and QA and finally into the production environment, it is important that the code at each level does not change. If a human being is manually changing connections, passwords, etc. at each stage, you are asking for a late night chasing a simple spelling error, extraneous tab, or extra semicolon. Kubernetes namespaces allow a Secret with the same name to be defined in each environment, so the application references it identically everywhere. Simple.

Accessibility

In contrast to Security, this is the least obvious benefit. However, anyone who has walked into a new project and found that the only documentation is the code will understand the need for consistency and clarity. This is also true for contracting consultants and developers. Those same people whose hands we need to keep out of the cookie jar also need to have access to build, test, and deploy with appropriate resources.

All these things are possible by implementing cloud-native apps that ingest secrets from the Kubernetes cluster as default, from the beginning. The perfect conditions do not, however, usually exist without a lot of forethought and planning.

A Note of Caution

Secrets management in Kubernetes is not perfect and should still be handled with care. Encoding something that is easily decoded, and leaving it in a public space, is a bad practice. Encoding is not the same as encrypting. Encoding simply translates a key, code, or certificate into something that can be decoded on the other side without sharing a decryption key. In Kubernetes, a base64-encoded secret can be decoded by anyone who has access to get those secrets. Use RBAC to remove that access from everyone who does not need it.
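
To make the point concrete, here is a minimal shell sketch that decodes a base64 value using nothing but the standard `base64` utility. The sample string is only an illustration; the commented kubectl line uses placeholder names and assumes a user who is allowed to read the Secret.

```shell
# A sample base64 value, as it would appear under data: in a Secret manifest.
encoded='VGFsa2luZyBhYm91dCB0aGUgd29sZg=='

# Decoding needs no key or password, only the encoded string itself.
decoded="$(printf '%s' "$encoded" | base64 -d)"
printf '%s\n' "$decoded"

# On a cluster, the same pipe works on a live Secret (placeholder names):
#   kubectl get secret NAME -n NAMESPACE -o jsonpath='{.data.KEY}' | base64 -d
```

This prints `Talking about the wolf`. Nothing was cracked; the value was never protected in the first place.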

Everyone should be familiar with the idea of the crash bar, panic bar, egress bar, etc. Much like the latch on old refrigerators, which we will get to in a moment, its purpose is to allow egress when needed while returning to a locked state for ingress, without user intervention. Most of these doors need to remain closed so that the connected alarm systems do not set off a “held open” alarm. To avoid this, and to facilitate those times when the door does need to be unlocked, most of them have a way to “dog down” the latch. In most cases, you push down the bar, insert and twist a dogging key, and the door stays unlocked. Ideally, this system works well to keep the property safe while allowing exceptions. The problem is that the key, which is most often some sort of hex key, can never be found when people need it, and when they need it, they have to have it. So the dogging key is placed, all too often, on the door jamb right above the door it can dog down. The result is an unlocked door in a space that is supposed to remain secured.

This is an excellent example of obfuscation without abstraction.

The Key Superglued in the Fridge Door

Old refrigerators had latches that sealed shut when the door closed. It was simply a solution that worked with the technology available. Even G.I. Joe had a PSA about the dangers of climbing inside and suffocating. Now, refrigerators have magnetic strips in the gasket, so it is easy to get out. The interim fix was to remove the latch but add a key lock to the door of the fridge/freezer, which kept the door shut without enabling someone to lock themselves in. The key was seen as a needed item to control access and for protection, but it was not so big of a deal that anyone was worried about having the Sunday roast stolen. I am told it was not uncommon to glue the key into the lock.

When we started building infrastructure and rolling out usable applications/web pages thirty years ago, we worked with what we had. There were no mainstream key management systems. Fast forward twenty years and some developers are still hard-coding keys and database access into version-controlled code. Some will argue that they are the only ones with access and that nothing is exposed. How many times have we all heard the argument that switching would be expensive and incredibly time-intensive? While hard-coding addresses one of the benefits listed above, accessibility, it opens the door to a security incident. Moreover, it forces the developer to change keys and codes as the applications move out of development towards staging, QA, and production. Worse, developers sometimes use the same key for all their environments and occasionally contaminate databases with the wrong data.

The Compromise

Business constraints exist. While it would be great to go through and fix everything all at once, there are always other projects that will take precedence over cleaning up systems that already exist in production.

I did promise advice.

The Unmovable Developer

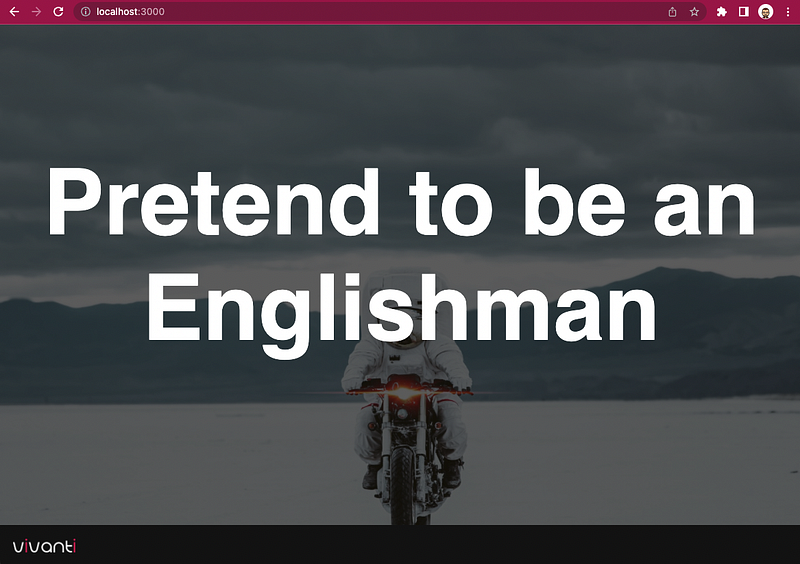

My boss, James Hunt, was all too happy to play the part of the stubborn, too-busy-to-breathe, unwilling-to-re-code developer. In all reality, I think he wrote this as a warm-up to the day with his first cup of coffee. His single-page web app called O-Fortuna was simple enough: run the pod and visit the webpage to see random sayings. The kicker here was that we wanted to be able to change the contents of the file that contained the different fortunes, and we were forbidden to alter the container itself. The fortunes.js config file looks like this:

    module.exports = [
      'There is no cow on the ice',
      'Pretend to be an Englishman',
      'Not my circus, not my monkeys',
      'God gives nuts to the man with no teeth',
      'A camel cannot see its own hump',
    ]

The Starting Line

For clarity’s sake, I have included the original deployment. Feel free to visit the repository and dig more into the Dockerfile and Docker run command to see where this manifest comes from. For all intents and purposes, we simply created a pod (in a Deployment) to run the o-fortuna image.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: file-injecternitiator
      name: file-injecternitiator
      namespace: fortuna
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: file-injecternitiator
      template:
        metadata:
          labels:
            app: file-injecternitiator
        spec:
          containers:
          - name: fortuna
            image: filefrog/o-fortuna:latest
            command: ["/bin/sh", "-c"]
            args: ["node index.js"]
            ports:
            - containerPort: 3000

Works as intended.

Possible Options

At this point, there are several options to get the new information into the pod.

- We could make a configMap to replace fortunes.js, and for this example that would be… fine. However, as we are looking at these fortunes as being secrets, we would have neither obfuscation nor abstraction… fail
- We could turn the new fortunes.js file into a Kubernetes secret object by base64 encoding the entire thing. We would have obfuscation and abstraction, but we would not have made the deployment easier to edit, easier for a contractor to work on, or simpler to push through development stages, nor would we have easy repeatability… fail
- We could create an init container to pull down the fortunes.js file from the git repository and inject replacement values into the file with sed commands before copying it over to the running container. While this may work for this specific instance, it is not repeatable and it is clunky.

Putting it All Together

Each of those options has good things going on for them, and if we combine them all, we get something useful. Reviewing our requirements, we want something that can be applied to a variety of applications to make use of Kubernetes Secrets Objects and 3rd-Party Secret Management. We want minimal impact on the existing project, with predictable results even in complex scenarios. We want to increase security, simplicity, and accessibility, and get our teams closer to best practices.

The configMap

The first step is to know WHAT we want to change and to prep that file. We know the format of the fortunes.js file, and we can prepare a version that has placeholders for future data. That file can be injected with a configMap.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: old-file
      namespace: fortuna
    data:
      old.file: |
        module.exports = [
          '${FORTUNE_1}',
          '${FORTUNE_2}',
          '${FORTUNE_3}',
          '${FORTUNE_4}',
          '${FORTUNE_5}',
        ]

The placeholders look like environment variables, but neither the Node application nor Kubernetes will treat them that way… yet.

The Secrets

Next come our new fortunes, ideally from a manager or team member who handles the encryption keys, etc. for the environment. I have taken the opportunity to base64-encode these ahead of time, but they could also be supplied as plain text under stringData.

    apiVersion: v1
    kind: Secret
    metadata:
      name: key-token-new
      namespace: fortuna
    data:
      FORTUNE_1: VGFsa2luZyBhYm91dCB0aGUgd29sZg==
      FORTUNE_2: VG8gc2hvdyB3aGVyZSB0aGUgY3JheWZpc2ggaXMgd2ludGVyaW5n
      FORTUNE_3: QSB3aGl0ZSBjcm93
      FORTUNE_4: VG8gcGxhbnQgYSBwaWcgb24gc29tZW9uZQ==
      FORTUNE_5: 0JrRg9C/0LjRgtC4INC60L7RgtCwINCyINC80ZbRiNC60YM=
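
As a rough sketch of how values like these can be produced, the standard `base64` utility does the encoding. The one trap worth showing is the trailing newline that `echo` appends, which silently becomes part of the secret; `printf '%s'` avoids it.

```shell
# Encode a fortune without a trailing newline.
good="$(printf '%s' 'A white crow' | base64)"

# echo appends a newline, which becomes part of the encoded value.
with_newline="$(echo 'A white crow' | base64)"

printf 'printf: %s\n' "$good"
printf 'echo:   %s\n' "$with_newline"
```

The first value, `QSB3aGl0ZSBjcm93`, matches FORTUNE_3 in the manifest. The second, `QSB3aGl0ZSBjcm93Cg==`, decodes to the same text plus a newline, which would land in the middle of the quoted string once substituted into fortunes.js.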

The Switcheroo

Variable-swap is a fairly self-explanatory image. It uses the envsubst command from the gettext package to replace all strings “annotated like variables” with the environment variables passed into the container. envsubst has the advantage over sed of processing the entire file at once, instead of once for each variable, and it does not have to be changed for each deployment. My git repository has a great standalone example of getting the image running on Docker Desktop, or if you want to roll your own:

    FROM ubuntu
    RUN apt-get update \
     && apt-get -y install gettext-base \
     && apt-get clean \
     && rm -rf /var/lib/apt/lists/*
    ENTRYPOINT ["/bin/sh", "-c", "envsubst < $OLD_FILE > $NEW_FILE"]

An important distinction needs to be made here. Neither Kubernetes nor Node.js saw the contents of the new fortunes.js file as having variables. envsubst looks for anything that looks like a shell variable reference and replaces it with the value of the matching environment variable. By default, a reference with no matching variable is replaced with an empty string, so make sure every placeholder has a corresponding key in the Secret.
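
The substitution is easy to try locally before involving a cluster. The sketch below assumes envsubst is installed (gettext-base on Debian/Ubuntu) and uses a shortened two-line template; the function name `render_fortunes` is made up for this example. FORTUNE_1 is set for the one command only, and FORTUNE_2 is deliberately left unset to show what envsubst does with a placeholder that has no value.

```shell
# Render a shortened template with envsubst.
# The function body runs in a subshell, so unset does not leak to the caller.
render_fortunes() (
  unset FORTUNE_2
  FORTUNE_1='A white crow' envsubst <<'EOF'
module.exports = [
  '${FORTUNE_1}',
  '${FORTUNE_2}',
]
EOF
)

if command -v envsubst >/dev/null 2>&1; then
  render_fortunes
else
  echo 'envsubst not found; install gettext (gettext-base on Debian/Ubuntu)' >&2
fi
```

With envsubst available, the first placeholder becomes `'A white crow',` and the second becomes `'',` because an unset variable is replaced with an empty string. The quoted heredoc delimiter (`'EOF'`) keeps the shell itself from expanding the placeholders before envsubst sees them.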

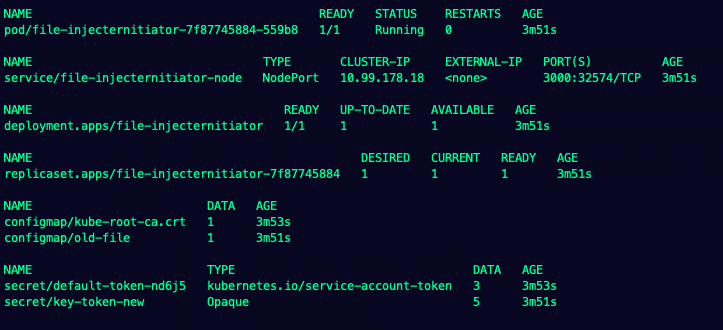

The Deployment

The final deployment can be seen put together in the file-injecternitiator git repository. The first thing to notice is the minimal change to the original container. We added a new file and copied that file to its final location.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: file-injecternitiator
      name: file-injecternitiator
      namespace: fortuna
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: file-injecternitiator
      template:
        metadata:
          labels:
            app: file-injecternitiator
        spec:
          containers:
          - name: fortuna
            image: filefrog/o-fortuna:latest
            command: ["/bin/sh", "-c"]
            args: ["cp /new_file/new.file /fortunes.js && node index.js"]
            ports:
            - containerPort: 3000
            volumeMounts:
            - name: injected-secret-volume
              mountPath: /new_file
          initContainers:
          - name: variable-swap
            image: tomvoboril/variable-swap
            env:
            - name: OLD_FILE
              value: /old.file
            - name: NEW_FILE
              value: /new_file/new.file
            envFrom:
            - secretRef:
                name: key-token-new
            volumeMounts:
            - name: injected-secret-volume
              mountPath: /new_file
            - name: old-file
              mountPath: /old.file
              subPath: old.file
          volumes:
          - name: injected-secret-volume
            emptyDir: {}
          - name: old-file
            configMap:
              name: old-file

Next, we can see the implementation of the variable-swap image as an init container. We can see our Secret object from above injected as environment variables. An init container must complete before the other containers can start. It can be difficult to diagnose init containers if you do not use the --all-containers flag when looking at logs.
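
A small sketch of what that log check might look like is below. The helper names `all_container_logs` and `init_container_logs` are hypothetical; the namespace, deployment, and container names are the ones used in this article's manifests.

```shell
# Show logs from every container in the Deployment's pod, init containers included.
all_container_logs() {
  namespace="$1"
  deployment="$2"
  kubectl logs "deployment/$deployment" --namespace "$namespace" --all-containers=true
}

# Show logs from one named container only, for example the init container.
init_container_logs() {
  namespace="$1"
  deployment="$2"
  container="$3"
  kubectl logs "deployment/$deployment" --namespace "$namespace" --container "$container"
}
```

For this deployment, that would be `all_container_logs fortuna file-injecternitiator` or `init_container_logs fortuna file-injecternitiator variable-swap`.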

We did not use an initContainer that had kubectl installed and a service account to inject the secrets directly into the run container from the initContainer. That would have increased complexity without increasing security. Arguably, that would have decreased security by possibly exposing the variables to the node host with process snooping.

We also used shared emptyDir: {} instead of a hostPath or persistent volume. This is, again, an attempt to simplify the cleanup of data and not expose secrets any more than necessary.

    initContainers:
    - name: variable-swap
      image: tomvoboril/variable-swap
      env:
      - name: OLD_FILE
        value: /old.file
      - name: NEW_FILE
        value: /new_file/new.file
      envFrom:
      - secretRef:
          name: key-token-new
      volumeMounts:
      - name: injected-secret-volume
        mountPath: /new_file
      - name: old-file
        mountPath: /old.file
        subPath: old.file

Finally, we can see where our “old” file is injected with a config map in the volumes section of the yaml file.

    volumes:
    - name: injected-secret-volume
      emptyDir: {}
    - name: old-file
      configMap:
        name: old-file

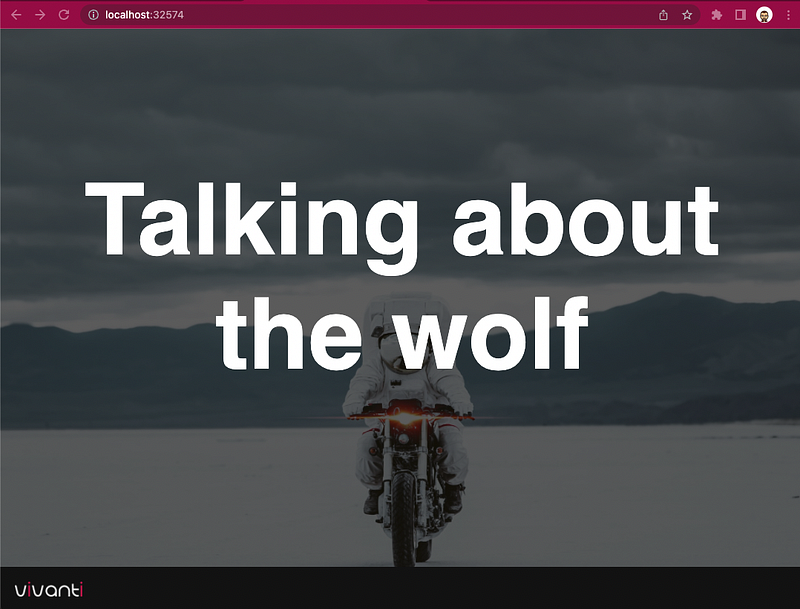

New fortunes!

Everything Running Beautifully.

Thoughts and Considerations

✓ More Secure?

✓ Simple to modify and deploy changes?

✓ More Accessible to other Developers?

This is also not the place to STOP improving. Sometimes making a big step is not feasible because of time/budget/infrastructure/personalities/internal politics, but those should not be the reason to avoid taking small steps. We would love to see all our clients have perfect environments that are free from hurdles, foibles, and holes, but sometimes it is more important to start building better habits than it is to make a single big change that doesn’t stick and doesn’t permeate through the company’s culture and processes.
